Knowing when to stop optimizing a product, feature, model, or process is an incredibly valuable and often underestimated skill for any knowledge worker, from engineers and product managers to data and business analysts.

I was lucky to have the opportunity to learn this lesson very early in my career, when I was working as an electronic engineer for a company developing communication devices. We spent a lot of effort improving transmission rates and signal range so that customers could send data faster with network devices placed farther from each other without losing reception quality. After developing what could be considered a “best in class” product, we saw our market share dwindle as customers moved to competitors with products that weren’t as fast and didn’t cover as much distance but were cheaper and fully solved the customer’s problem.

Turns out we were continuing to optimize our products on dimensions of quality that were already overserved. Our customers didn’t need better transmission rates or broader signal range; they were happy with the performance levels achieved by our current version of the product. When faced with a choice between a product that exceeded their expectations and was more expensive, and a competitor’s product that was fit for purpose and much cheaper, they naturally selected the cheaper alternative, which let them keep some of their budget to spend on more value-adding equipment or services.

Here are a couple of additional examples that illustrate the importance of “knowing when to stop” in business and data analysis contexts:

Feature improvement

Imagine you’re working on a content management application that is used to publish blog posts to customers’ websites. The application has a feature that allows authors to label their drafts to identify the topic of the post, flag the post as ready for revision, etc. As the product manager of the application, you might be tempted to continue to improve the label feature indefinitely: letting authors organize labels into categories (e.g., topics or publication stage), search for labels using auto-fill, filter posts not only by label but by more sophisticated conditions (“post has label Ready for Review but does not include the label Marketing Campaign”), and so forth.

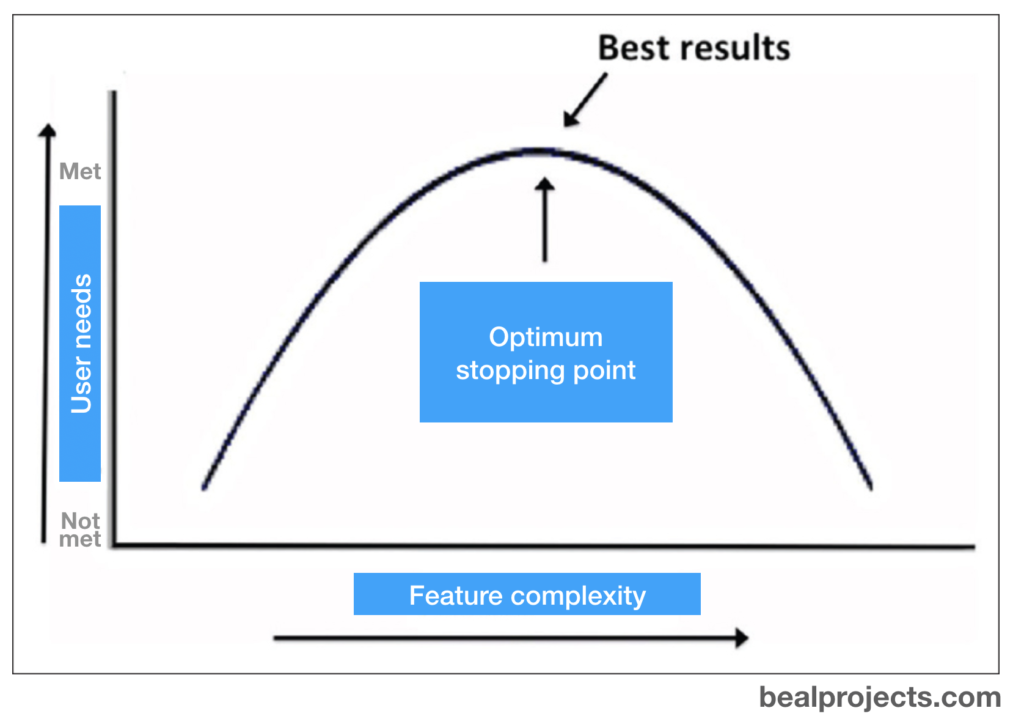

There will be a point in the improvement process where the law of diminishing returns starts to apply: each additional unit of money or energy invested produces a smaller benefit than the one before it. If you’re not careful, you may find yourself on the wrong side of an inverted U curve where, after a certain amount of improvement, adding more bells and whistles actually starts to hurt user satisfaction. Excessive design complexity, for example, can put new barriers between users and a routine action like labeling a post.

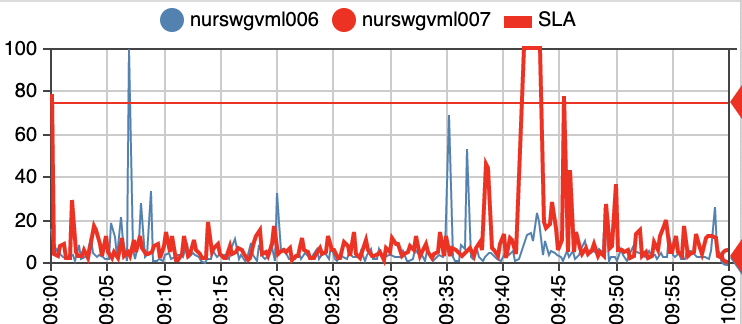
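To make the stopping rule concrete, here is a minimal Python sketch. It assumes you can measure some benefit (say, a user satisfaction score) after each round of improvement. The function name, the threshold, and the scores are all made up for illustration; they are not real data.

```python
def stopping_point(benefits, min_gain):
    """Return how many improvement rounds are worth shipping.

    benefits[i] is the (hypothetical) measured benefit after i rounds,
    so benefits[0] is the baseline. We stop just before the first round
    whose marginal gain falls below min_gain.
    """
    for i in range(1, len(benefits)):
        marginal_gain = benefits[i] - benefits[i - 1]
        if marginal_gain < min_gain:
            return i - 1
    return len(benefits) - 1


# Made-up satisfaction scores shaped like an inverted U (illustration only).
satisfaction = [50, 65, 74, 78, 79, 77, 72]

# Marginal gains are 15, 9, 4, 1, -2, -5; the fourth round adds less than 2.
rounds_to_ship = stopping_point(satisfaction, min_gain=2)
print(rounds_to_ship)  # 3
```

In practice the hard part is choosing `min_gain`: it should reflect what one more round costs you, including the opportunities you give up by not working on something else.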

Visual analytics for streaming data

The analysis of streaming data (data that is generated continuously rather than collected in a single pass) presents a new set of challenges for the designers of visual analytics tools. The scale of the data to be processed and visualized makes it hard for an interactive system to update and render information at the necessary rate.

Here the same concept of the inverted U curve applies. The human visual system is bounded by some well-understood perceptual limits. At some point, additional improvements to the frequency at which information is updated and rendered won’t be detectable by humans, and could hurt the quality of the solution by requiring degradation of data sampling and analytics to meet the enhanced visualization need. Knowing when to stop optimizing data visualization allows us to terminate any additional computation at that optimal point without degrading the visual display’s accuracy.
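One simple way to act on this is to cap how often the display is redrawn, no matter how fast the data arrives. The sketch below is only an illustration: `frames_to_render` and `max_useful_hz` are hypothetical names, and the cap you would actually use depends on your display and your audience, so treat the value here as a placeholder.

```python
def frames_to_render(arrival_times, max_useful_hz):
    """Pick which updates to draw, given arrival timestamps in seconds.

    An update that arrives sooner than 1 / max_useful_hz after the last
    drawn frame is skipped, on the assumption that the viewer could not
    perceive the extra redraw anyway.
    """
    min_interval = 1.0 / max_useful_hz
    rendered = []
    last_drawn = None
    for t in arrival_times:
        if last_drawn is None or t - last_drawn >= min_interval:
            rendered.append(t)
            last_drawn = t
    return rendered


# Updates arrive 8 times per second, but we assume (placeholder value)
# that redrawing more than twice per second adds nothing for the viewer.
arrivals = [i / 8 for i in range(9)]
drawn = frames_to_render(arrivals, max_useful_hz=2)
print(drawn)  # [0.0, 0.5, 1.0]
```

The skipped updates are not thrown away in a real system; the next redraw would simply show the latest state. The point is that the rendering budget saved can go back into sampling and analytics instead.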

How to avoid the consequences of not knowing when to stop

Now that we know what the problem is, how can we avoid it?

While merely being aware of the problem can help us avoid it, we can go a step further and adopt certain practices to prevent serious mistakes when deciding where to focus our resources.

- Make sure to ask good questions that challenge your current beliefs. Are we focusing on the opportunities we should address, or merely the opportunities we can address? How might we go looking for contradictory information, so that we don’t take actions we’ll later regret because they delivered no real benefit?

- Use frameworks like the Kano model to better understand the limits of the functional and emotional benefits gained by continuing to embellish and enhance must-have product features, as well as to identify undesired features that can actually have a negative impact.

- Recognize that most big things start small. As Jeff Bezos said, “The biggest oak starts from an acorn and if you want to do anything new, you’ve got to be willing to let that acorn grow into a little sapling and then finally into a small tree and maybe one day it will be a big business on its own.” Use the concept of minimum viable product to build the smallest thing you can build that delivers customer value (and as a bonus captures some of that value back). Ensure you’re collecting the maximum amount of validated learning about your customers, what jobs they’re trying to get done, and what outcomes they are trying to achieve. Armed with this knowledge you’ll be able to systematically and predictably identify the opportunities to target important, underserved outcomes and avoid the risk of focusing on the unimportant, overserved ones.